Imitation Learning of a Robust Policy for an Aerial Robotic System Incorporating Data Aggregation by Closed Loop Sensitivity

Physical interaction with the environment poses a significant challenge for controlling Unmanned Aerial Vehicles (UAVs). Unlike free-flight tasks, contact interactions introduce nonlinear and unpredictable dynamics. While Reinforcement Learning (RL) has shown promise for such tasks, it typically relies on extensive simulation to avoid damaging the real system. A more direct approach is to start RL from an initial policy learned from expert demonstrations through Imitation Learning (IL).

This project aims to develop a control policy for aerial physical interaction by leveraging recent advances in IL. The approach will build on sensitivity-based data aggregation, allowing for the use of a small number of expert demonstrations. The learned policy will then serve as an initial candidate for a model-based RL algorithm, enabling direct policy learning on the real robot. The outcome will be a robust policy for push-and-slide interaction tasks.
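As a rough illustration of the data-aggregation idea, the following minimal sketch runs a DAgger-style loop on a toy 1-D double integrator. Everything in it is an assumption made for illustration: the PD expert, the linear policy, the perturbed mass parameter, and the finite-difference sensitivity proxy used to select which states to relabel. It is not the closed-loop sensitivity formulation the project will study, only a simplified stand-in for it.

```python
import numpy as np

DT = 0.02  # integration step in seconds (assumed)
EXPERT_GAIN = np.array([-4.0, -3.0])  # hypothetical PD gains on [position, velocity]


def expert(x):
    """Hypothetical PD expert driving position and velocity to zero."""
    return float(EXPERT_GAIN @ x)


def rollout(gain, mass, steps=200):
    """Simulate a 1-D double integrator under the linear policy u = gain @ x."""
    x = np.array([1.0, 0.0])
    states = []
    for _ in range(steps):
        states.append(x.copy())
        u = float(gain @ x)
        x = x + DT * np.array([x[1], u / mass])  # explicit Euler step
    return np.array(states)


def fit(states, actions):
    """Least-squares fit of a linear policy to expert-labelled data."""
    gain, *_ = np.linalg.lstsq(states, actions, rcond=None)
    return gain


def aggregate(iterations=5, mass=1.0, dmass=0.1, keep=50):
    """DAgger-style loop that relabels the most parameter-sensitive states."""
    # Initial dataset: a single expert demonstration.
    data_x = rollout(EXPERT_GAIN, mass)
    data_u = np.array([expert(x) for x in data_x])
    gain = fit(data_x, data_u)
    for _ in range(iterations):
        nominal = rollout(gain, mass)
        perturbed = rollout(gain, mass + dmass)
        # Finite-difference proxy for the sensitivity of the closed-loop
        # state to the mass (an assumed stand-in, not the project's method).
        sens = np.linalg.norm(perturbed - nominal, axis=1) / dmass
        idx = np.argsort(sens)[-keep:]  # time steps with the largest proxy
        new_x = perturbed[idx]
        new_u = np.array([expert(x) for x in new_x])  # query the expert
        data_x = np.vstack([data_x, new_x])
        data_u = np.concatenate([data_u, new_u])
        gain = fit(data_x, data_u)  # retrain on the aggregated dataset
    return gain, len(data_x)


gain, n_samples = aggregate()
```

Because the toy expert is itself linear, the fitted policy reproduces it almost exactly; in the actual task the policy would more plausibly be a neural network, and the choice of which states to relabel would follow the sensitivity analysis from the literature.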

- Literature Review: Study recent advances in Imitation Learning for UAV control, with a focus on closed-loop sensitivity and data aggregation.

- Implementation of Expert Controller: Develop a force/impedance control expert for aerial physical interaction.

- Design of IL Pipeline: Design an Imitation Learning pipeline based on the literature, incorporating data aggregation using closed-loop sensitivity.

- Implementation: Implement the IL pipeline in Python, ensuring compatibility with the telekyb3 framework.

- Validation: Test the learning strategy in simulation, then deploy it on the real system.

- We conduct high-quality, impactful research in robotics and publish in the major journals and conferences.

- We often collaborate with other top researchers in Europe and worldwide.

- You will have access to a well-established laboratory including:

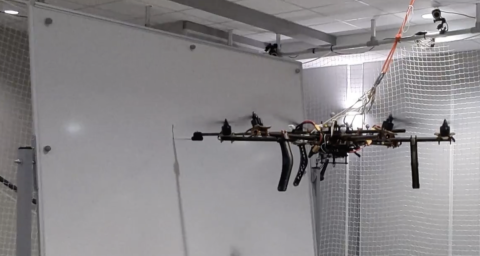

- two flying arenas equipped with a motion tracking system, several quadrotors, and a few fully-actuated manipulators,

- one robotic manipulation lab equipped with several robotic arms, such as the Franka Emika Panda.

- You will be part of an international and friendly team. We organize several events, from after-work gatherings to multi-day lab retreats.

- Regular visits and talks by internationally known researchers from top research labs.

- Excellent written and spoken English skills

- Good experience in C/C++, ROS, Matlab/Simulink, CAD

- Good experience with numerical trajectory optimization tools for robotics (e.g., use of CasADi, ACADO, Autodiff, Crocoddyl, etc.)

- Scientific curiosity, a high degree of autonomy, and the ability to work independently

- Experience with robotic systems and/or aerial robots is a plus

Interested candidates are requested to apply via this form. The position will remain open until a satisfactory candidate is found.

You will be contacted only if the feedback on your application is positive.

Applications sent directly by email instead of through the web form will not be considered.